New Frontiers in AI and Generative Art

The latest AI tools can generate images (or art?) from text prompts in ways that will blow your mind. Here's what's currently possible, why it's important, and how you can do it too.

The last time technology made me feel like I had superpowers was in 2008, when I bought my first iPhone. Having the actual internet in your pocket, on a touchscreen (touch…what?), and not some dumbed-down version of it, was astonishing. And really powerful. Steve Jobs called it “an iPod, a phone and an internet communicator”.

The same thing happened to me with generative artificial intelligence (AI). It’s utterly fascinating and hard to believe if you haven’t tried it yourself. If you’re a creator, you really – I mean REALLY – need to get up to speed with this.

In an era when much of the tech industry seems to be down in the dumps, AI is experiencing a golden age.

I think this technology will change the game in art, design and creation (and possibly everything else too). It already is.

Everyone should try it.

Here’s a sneak peek of what’s currently possible: an image of Barack Obama, generated from the following prompt and refined over a few iterations:

Barack Obama, face, emotional, highly detailed, photorealistic, tattoo, wrinkles, beard

Wow. Who said the future wasn’t exciting?

I created the image above with Midjourney, a tool built by the independent research lab of the same name, which describes its mission as “expanding the imaginative powers of the human species”. Sounds good, right?

They have started alpha testing Midjourney V4, a much more powerful version than its predecessor. You can sign up as a beta user and start creating right away. More on that below.

Midjourney is currently one of the three most popular generative AI tools for images, alongside OpenAI’s DALL-E and Stable Diffusion. Here’s a helpful comparison of the three.

Let’s see Midjourney in action.

Midjourney V4 in action

I created all the images below within a day of first using Midjourney. Most of them needed a few tweaks and iterations to get just right. I was especially interested in photorealistic portraits that evoke the same emotions as real photographs. Let’s go!

/imagine…

…an abstract, expressionistic oil painting:

abstract, oil painting, expressionism, neon colors, white space, red dots

… or an old, wise man, scarred by life, seasoned by experience, worried and deeply charismatic:

old man, face, eyes, fear, wrinkles, grey hair, beard, highly detailed, photorealistic, tattoo, on the brink of bailout

…and here is his friend:

… a close-up of a beautiful, confident woman in her thirties with brown hair:

face, female, confident, 30 years old, beautiful, brown hair, highly detailed, photorealistic

AI seems to know what “beauty” is.

… or a supermodel, like Kate Moss?

kate moss, open skin shoulders, smoking, studio lightning, contour light, uniform dark background, photographic detail, f/2, focal length 70mm

… or a studio portrait shot of Pablo Picasso?

pablo picasso, skin, natural, contour light, studio lightning, uniform dark background, photographic detail, f/2, focal length 70mm --v 4 --upbeta

… or a historical figure like Marcus Aurelius.

(I’m reading his book “Meditations” and was wondering what the face of that Stoic genius looked like.)

marcus aurelius, close-up, face, emotional, eyes, highly detailed, photorealistic, rome

You can’t fake that emotion! Wow. Maybe he is looking at “a severed hand or foot, or a decapitated head” during the Marcomannic Wars, as he writes in Meditations.

… or a deeply emotional Barack Obama, as if in the moment right after publicly confessing that continuing the war in Iraq was a mistake:

Barack Obama, face, emotional, highly detailed, photorealistic, tattoo, wrinkles, beard

Interesting detail: the prompts for the two images of Barack Obama are identical. Through tweaking and iteration, I arrived at different results.

… or more abstract concepts like this dystopian New York (or Gotham City)?

glowing ball, dark city, sky scrapers, matrix, stars, sky, apocalypse, new york, myst, photorealistic, highly detailed

I wonder whether the reflections are correctly displayed. And where is Batman?

… what about Donald Trump and Joker shaking hands in New York? No problem!

… what about a close-up of Donald Trump? Done.

donald trump, skin, natural, contour light, studio lightning, uniform dark background, photographic detail, f/2, focal length 70mm --v 4 --upbeta

…or have you ever wondered what George Orwell would look like walking around today, watching the dystopian future of “1984” unfold in horror? Here he is, in person.

george orwell, close up, watching a dystopian future, big brother behind him, screens and cameras, rain, crowds, dark, gin, photorealistic, detailed, 8k

… or paradise?

jungle, lush nature, flowers, rainforest, animals, butterflies, snakes, insects, life, thriving, green, paradise, photorealistic, highly detailed

Adam and Eve are still missing, I guess. But look at the details of the butterfly’s wings. Stunning.

… what about Cleopatra, Queen and active ruler of the Ptolemaic Kingdom of Egypt from 51 to 30 BC?

… or to get to the bottom of it: God himself?

God

Note: the AI automatically depicts God as male. I wonder where it got that from.

It grasps our understanding of beauty, biblical history, concepts from books, emotions, ancient figures and more. If that doesn’t blow you away, what will?

The tool still has obvious flaws, though. It struggles with abstract inputs such as complex human emotions or philosophical concepts (which would be hard for a human to visualize, too). I also noticed that the same input doesn't always produce the same result.

I subsequently minted some of the creations above and many more as NFTs. Curious what humanity looks like? Or the end of history? You can find them here.

Midjourney discourages people from minting NFTs by requiring that anyone using generated images in “anything related to blockchain technologies” pay a 20 percent royalty on any revenue over $20,000 per month.

How you can do it

Here’s a short step-by-step guide on how to use Midjourney V4:

Sign up for Discord

Join the Midjourney Discord server

Navigate to one of the “newcomer rooms”

Write “/settings” into the chat window and press enter

Toggle “MJ Version 4”

Start creating by typing “/imagine” followed by a prompt into the chat window

Press enter and wait for the first result

The V1-V4 buttons create variations of one of the four proposed pictures (V1 top left, V2 top right, V3 bottom left, V4 bottom right); U1-U4 upscale the corresponding picture.

More details can be found in the quick start guide here.
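Every prompt in this post follows the same shape: comma-separated keywords, sometimes followed by parameters such as `--v 4` and `--upbeta`. The minimal Python sketch below only assembles that text so you can paste it after `/imagine`. It does not talk to Midjourney or Discord, the helper name `build_prompt` is hypothetical, and the two parameters are simply copied from the prompts above; it makes no claim about which other parameters Midjourney accepts.

```python
from __future__ import annotations


def build_prompt(keywords: list[str], params: dict[str, str | None] | None = None) -> str:
    """Join keywords with ", " and append Midjourney-style "--name value" parameters.

    Hypothetical helper: it only formats text, it does not call any Midjourney API.
    A parameter whose value is None is emitted as a bare flag (e.g. --upbeta).
    """
    # Drop empty keywords and surrounding whitespace, then comma-separate the rest.
    text = ", ".join(k.strip() for k in keywords if k.strip())
    # Dicts keep insertion order, so parameters appear in the order given.
    for name, value in (params or {}).items():
        text += f" --{name}" if value is None else f" --{name} {value}"
    return text


if __name__ == "__main__":
    # Rebuilds the Pablo Picasso prompt used earlier in this post.
    print(build_prompt(
        ["pablo picasso", "skin", "natural", "contour light", "studio lightning",
         "uniform dark background", "photographic detail", "f/2", "focal length 70mm"],
        {"v": "4", "upbeta": None},
    ))
```

Run as shown, it prints the Picasso prompt exactly as typed above, ready to follow `/imagine` in the chat window.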

Midjourney currently proposes four different images for each prompt and gives you only a narrow set of options to stylize them further. Each step takes about a minute to complete, and the input-output loop of typing commands into Discord is clunky and relatively primitive.

The future is faster than you think

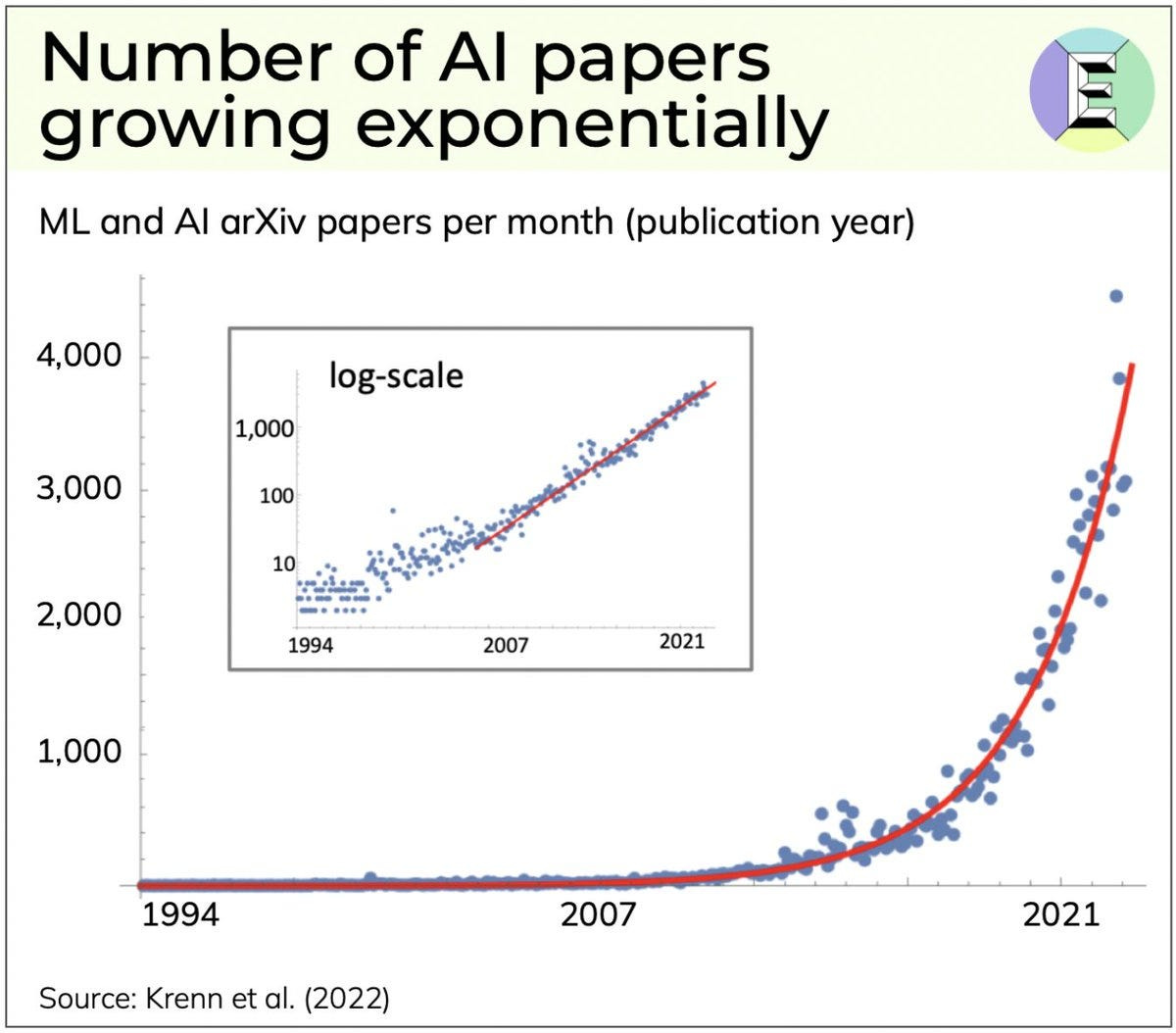

We are truly at the beginning of a new era. This is a massive paradigm shift, and it is arriving much more quickly than most of us expected. AI is taking huge leaps forward almost every month, and the whole industry is booming, particularly in Silicon Valley. Midjourney V2 was released in April 2022; testing of V3 started in July 2022 and testing of V4 in November 2022. And the leaps are massive: each iteration offers greater detail and better compositions from simple prompts. AI language and art models are growing roughly 10x a year in size, far beyond the pace of Moore's Law.1
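To get a feel for what “10x a year” means next to Moore's Law, here is a minimal Python sketch that compounds both rates over a few years. It assumes Moore's Law in its common form of a doubling roughly every two years and takes the 10x-per-year figure at face value; the name `growth_factor` is just illustrative.

```python
def growth_factor(multiplier: float, period_years: float, years: float) -> float:
    """Total growth after `years` if size multiplies by `multiplier`
    once every `period_years` years (simple compound growth)."""
    return multiplier ** (years / period_years)


# Assumption: Moore's Law modeled as a doubling (x2) every two years.
MOORE = (2.0, 2.0)
# Rate quoted in the text: AI models growing roughly 10x every year.
AI_MODELS = (10.0, 1.0)

if __name__ == "__main__":
    for years in (1, 2, 5):
        moore = growth_factor(*MOORE, years)
        ai = growth_factor(*AI_MODELS, years)
        print(f"{years} year(s): Moore's Law x{moore:,.1f}, AI models x{ai:,.0f}")
```

After five years, Moore's Law gives roughly a 5.7x increase, while 10x a year compounds to 100,000x. That gap is what “beyond Moore's Law pace” means.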

Let’s step back for a moment and imagine what’s possible if these tools become 10x or 100x more powerful and quicker (which they will, soon). Just watch that interview with Sam Altman, CEO of OpenAI, to get a grasp of what’s coming.

Imagine that pictures can no longer be distinguished from photographs.

Imagine editing in real time (not one minute per step), about as fast as applying Photoshop filters or Lightroom corrections today.

Imagine an AI tool with unlimited understanding of inputs (not narrowed down to five).

Imagine this technology merging with augmented and virtual reality, moving from static images to moving images. How about creating 3D worlds in real time and being in them? Or movies?

Imagine that we could imagine these worlds through brain-machine interfaces (and not with a string of primitive words), so that what we create is exactly what we imagine.

/imagine becomes limitless. The whole universe will be in your hands.

Will that be the “Metaverse” everyone is talking about? Who knows.2

New questions and opportunities

The more amazed I am by this new technology, the more questions arise:

How are these AI tools trained? Who decides what's good and evil? How do we make sure they don’t discriminate? How do we avoid biases?3

How do we value (and reevaluate) human creativity?

Is AI-generated “art” art, or is it plagiarism? What is art? Can computers create art? And who’s the artist? (Apparently, our standards for great art are not objective.)

Should an AI model be allowed to train on existing art and images, or could that be copyright infringement?

What does AI mean for generative art?

How do we protect ourselves from impersonations and fake news?

What’s real and what’s not?

… etc.

I feel that we are unprepared for this in so many ways.

Meanwhile, this opens new opportunities for creators and brands. Some ideas:

Used in the right way, these tools could help artists and creators significantly enhance their creative expression

Hyper-personalization of advertisement visuals and visual brand experiences (in the metaverse?) based on the preferences of individual users and clients could become possible

Drastic cost reductions by automating the creation of (generic) brand assets & creatives

Co-creation could become even more expressive and fun

…etc.

That’s it. Lots still to uncover.

– Marc

Next steps:

Check out this Tweet for some further fascinating AI tools

Check out my NFT gallery of the best creations for some inspiration

Check out Midjourney

Check out DALL-E: https://openai.com/dall-e-2/

Check out the OpenAI Playground: https://beta.openai.com/playground

Check out what you can do with text (ChatGPT): https://chat.openai.com/chat

Check out the community showcase of Midjourney: https://www.midjourney.com/app/feed/all/

Watch the interview with Sam Altman, CEO of OpenAI.

Further reading & sources:

Roose, K. (2022, October 21). A coming-out party for Generative A.I., Silicon Valley's new craze. The New York Times. Retrieved November 28, 2022, from https://www.nytimes.com/2022/10/21/technology/generative-ai.html

Heikkilä, M. (2022, September 16). This artist is dominating AI-generated art. And he's not happy about it. MIT Technology Review. Retrieved November 28, 2022, from https://www.technologyreview.com/2022/09/16/1059598/this-artist-is-dominating-ai-generated-art-and-hes-not-happy-about-it/

Hertzmann, A. (2018, May). Can computers create art? In Arts (Vol. 7, No. 2, p. 18). MDPI. https://arxiv.org/pdf/1801.04486.pdf

Batycka, D. (2022, November 26). What does the rise of A.I. models mean for the field of generative art? NFT artists and curators weigh in. Artnet News. Retrieved November 28, 2022, from https://news.artnet.com/market/future-of-generative-art-ai-models-erick-calderon-george-bak-dmitri-cherniak-2207167

In 1998, Yann LeCun's breakthrough neural network, LeNet, contained 60,000 parameters (a rough measure of a model's capacity to do useful things). Twenty years later, OpenAI produced the first version of GPT with about 117 million parameters. GPT-2 has 1.5 billion and GPT-3, now two years old, has 175 billion. More parameters generally mean better results. Multimodal networks, which combine text with images or other kinds of data, are even more complex. The biggest are approaching 10 trillion parameters.

I will write more about the Metaverse in a separate post.

Midjourney hasn’t published any information about what datasets and methods were used to train its AI tool, and doesn’t seem to have many explicit content protections aside from automatically blocking certain keywords.