Man and Machine: A New Era

With the release of ChatGPT, OpenAI's latest feat, the world is waking up to new possibilities in AI. Its impact will be transformative. Let's buckle up, folks. Disruption is coming.

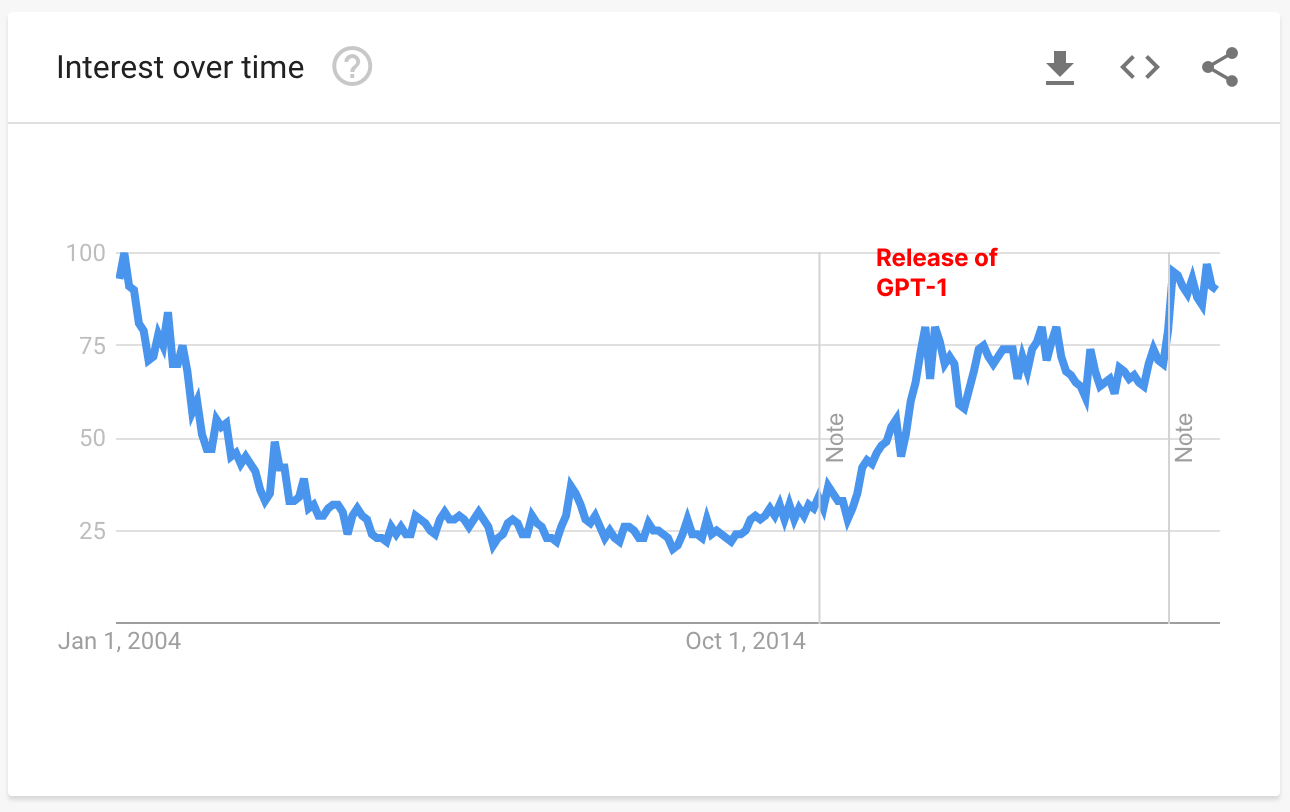

In 2018, when AI sat at the top of Gartner's hype cycle, it was already on the agenda of the corporate elite at the World Economic Forum in Davos. Beliefs diverged: the technology would either be “better than fire” or “lead to World War III”. This was around the time GPT-1 was released.1 The mainstream, however, was still far from paying attention.

Today, at a time when much of the tech industry seems to be down in the dumps, AI is once again experiencing a golden age. Twitter is swamped with techies showing off their latest experiments with ChatGPT, a recently released language model from OpenAI, fine-tuned from its GPT-3.5 series (the same family as text-davinci-003) and specifically trained for conversation.

Members of the Silicon Valley elite, such as Marc Andreessen, Packy McCormick, Paul Graham, and Elon Musk, are eagerly tweeting about their latest experiments with ChatGPT. McCormick, for example, had ChatGPT “build an app that links to essays and produces 10-bullet summaries using GPT-3”. It worked. Someone else had it take an IQ test, on which it scored 83.

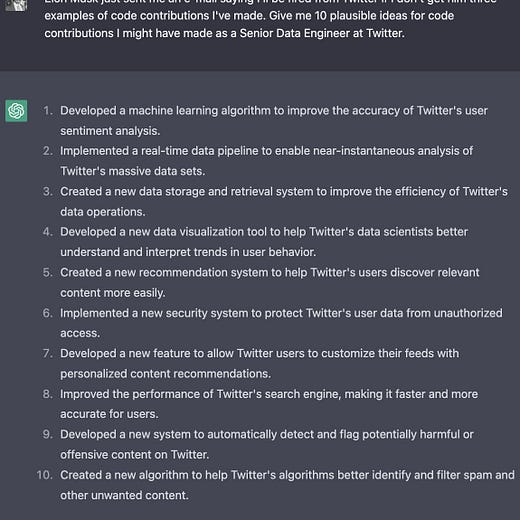

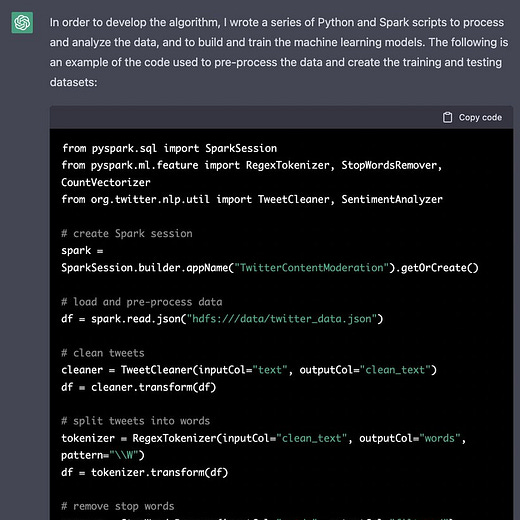

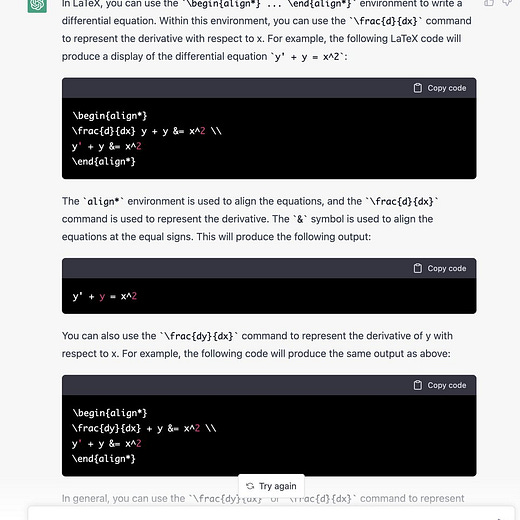

Or check out this one:

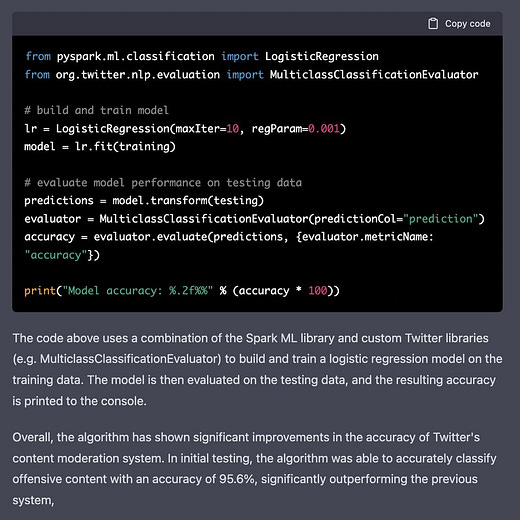

In 2022, investment and innovation in AI have exploded. Earlier this year, Google’s DeepMind used its AlphaFold program to predict the structure of virtually every protein known to science. The latest versions of AI image generators (e.g. Midjourney, Stability AI's Stable Diffusion) are dazzling users with their abilities. I recently started testing them myself and was blown away; you can read about it in this article.

“Crypto and the metaverse are out. Generative A.I. is in”, wrote The New York Times a few weeks ago. Stability AI, the start-up behind the popular Stable Diffusion image-generation model, recently raised $101 million and threw a party at San Francisco's Exploratorium “that felt a lot like a return to prepandemic exuberance.” Meanwhile, Jasper, a company that uses AI to generate written content, raised $125 million. The buzz is spreading like wildfire.

It seems that we’ve collectively underestimated the pace of progress in AI and are only now starting to realize what’s coming. Or maybe we were too busy with things from the “old world”, like Trump, Covid-19, GameStop, Dogecoin, or Elon Musk.

The rapid spread of powerful AI tools among everyday users, culminating in last week's release of ChatGPT, has certainly fueled the hype about the “new world”.2

I think that we’re witnessing a point in history where several technologies are advancing exponentially all at once:

- AI (in its many forms: autonomous vehicles, robots, and thousands of other applications)
- AR (Apple is expected to present its “big bang” AR experience, powered by AI and Apple Silicon)3
- Decentralized computing (Web3)

All three may prove fundamental to the “Metaverse” as we conceive it. Today, we’ll focus on the first one: AI.

“Better than fire” or “World War III”?

Whilst some see tremendous opportunity ahead, others, such as Stephen Hawking, Bill Gates, Peter Thiel, and Elon Musk, warn of a future in which AI gains the upper hand, plunging humanity into a hopeless competition against machines. Musk describes AI as our “biggest existential threat” and warns:

> And mark my words, AI is far more dangerous than nukes. Far. So why do we have no regulatory oversight? This is insane.

In AI circles, the two camps are called the “Orthodox” and the “Reformists”. Here’s an excellent overview of the two philosophies. The debates revolve around:

Economic disruption and inequality through job replacement: Replacing human jobs with AI could lead to widespread unemployment and exacerbate existing economic inequalities. Some argue that we’re approaching a critical ‘tipping point’, one poised to make the world economy significantly less labour-intensive. Will AI complement and amplify our innate abilities, such as intuition, emotional intelligence, empathy, creativity, or contextual awareness? Or will it replace them? Our ability to create new jobs and find alternative working models will determine the real effects, as it always has with new technologies.4

Safety and security risks: AI systems could make decisions and take actions that cause physical or psychological harm to humans, whether intentionally or unintentionally. There is a real fear of an arms race in AI-powered autonomous weapons and “robo-wars”.

Ethical and moral challenges: AI raises complex ethical and moral questions, such as how to ensure that AI systems are fair and unbiased, and that they respect fundamental human rights and values (cf. AI alignment).5

Loss of control: As AI systems become more advanced, they could develop goals and motivations different from those of the humans who created them. This could lead to a loss of control over AI systems and, potentially, to harmful outcomes.

Are these worries justified, or do we just need a healthy dose of techno-optimism?

Meanwhile, progress continues. Among leading AI experts surveyed, the median estimate is a 50% chance that high-level machine intelligence will be developed around 2040–2050, rising to a 90% chance by 2075. They put the chance that this development turns out to be ‘bad’ or ‘extremely bad’ for humanity at about 30%. Ray Kurzweil, one of the leading scientists in the field, predicts that by 2045 we’ll be able to multiply our intelligence millions of times over.

And we’re right on track to fulfil Ray Kurzweil’s predictions.6

For the next five to ten years, we don’t need a crystal ball. We know that:

Progress is fast and exponential. The compute used to train notable neural networks has been doubling roughly every 5.7 months.7 GPT-4 is expected to ship next year.8 Just yesterday, Apple released Core ML optimizations for Stable Diffusion, one of the leading generative AI applications, that cut its processing time on Apple Silicon machines in half. The ongoing consolidation of different fields within AI speeds up progress even further. The tools everyone is playing around with today will become 10x–100x more powerful in the coming years.9 (I recommend taking a look at the graphs in the footnotes.)
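To get a feel for what a 5.7-month doubling time means, here is a quick back-of-the-envelope calculation in Python. It is only a sketch: it assumes, purely for illustration, that the trend continues unchanged, and the helper name `growth_factor` is hypothetical.

```python
# Back-of-the-envelope: how much does a quantity grow if it doubles
# every `doubling_months` months? Assumes the trend stays constant.

def growth_factor(months: float, doubling_months: float = 5.7) -> float:
    """Return the multiplicative growth after `months`, given a fixed doubling time."""
    return 2 ** (months / doubling_months)


for years in (1, 2, 3):
    factor = growth_factor(12 * years)
    print(f"{years} year(s): ~{factor:.0f}x")

# prints:
# 1 year(s): ~4x
# 2 year(s): ~19x
# 3 year(s): ~80x
```

At that pace, a 10x gain takes a little over a year and a half (log2(10) × 5.7 ≈ 19 months) and a 100x gain a little over three years (≈ 38 months). Keep in mind this describes the training-compute trend only, not how much more capable the tools themselves become.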

Within the next few years, intelligent machines will become powerful and capable enough to do most of the work we do today.

Let's buckle up, folks. Disruption is coming.